Concepts

Before using the software, it helps to understand the following concepts so that you can make better use of its features.

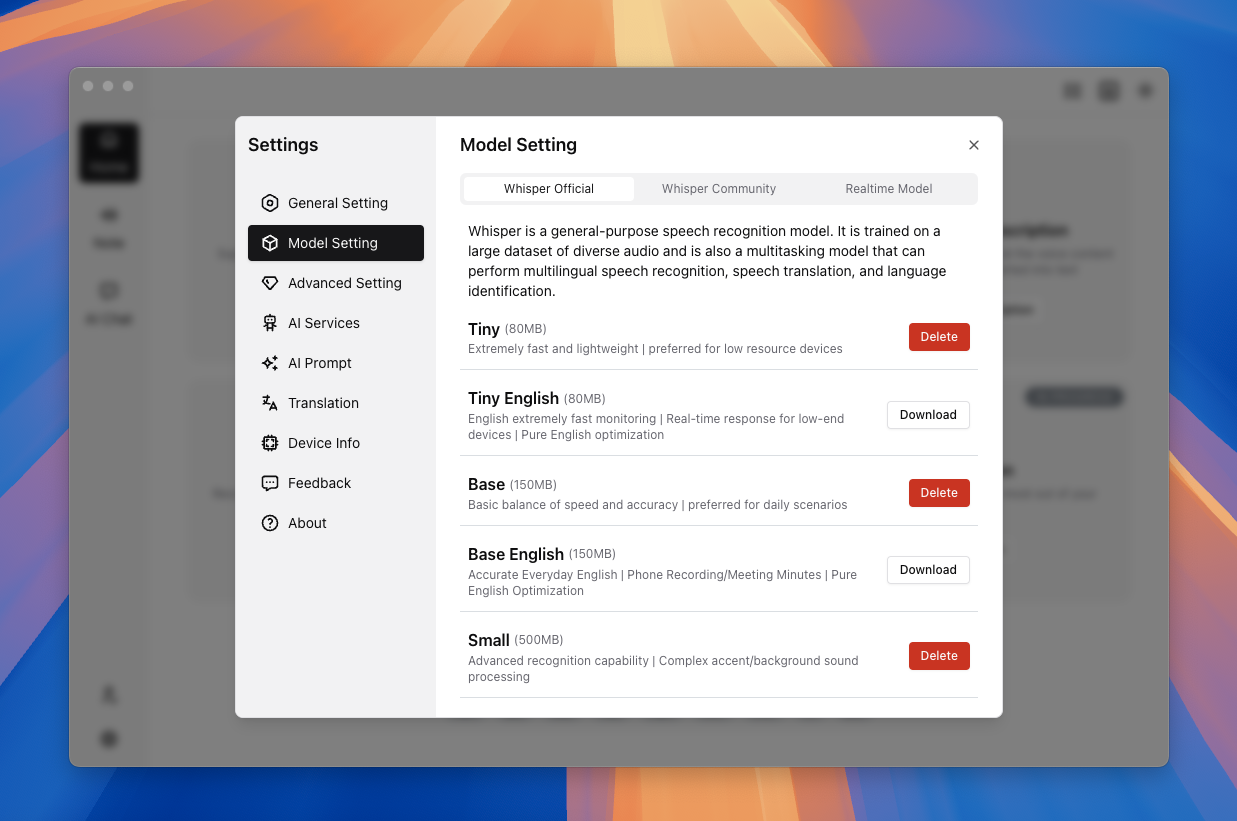

Audio Note supports not only the official Whisper models but also community models and real-time models. You can choose the appropriate model based on your device and usage scenario.

What are Whisper Models?

Whisper is a family of speech recognition models developed by OpenAI. The models convert speech to text in many languages and can also translate speech from other languages into English.

The models are divided into different versions based on parameter size and performance:

- Tiny

- Base

- Small

- Medium

- Large-v2

- Large-v3

- Large-v3-Turbo

Tiny, Base, Small, and Medium all have corresponding English-only models. If you only need to recognize English and your device has limited performance, you can use the English-only models to balance transcription quality and performance.
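If you also use the open-source `openai-whisper` Python package outside Audio Note, English-only variants are selected by appending `.en` to the model name (for example `tiny.en`). The sketch below is a hypothetical helper, not an Audio Note API, that builds a model name from a size and an English-only flag, assuming the naming accepted by `whisper.load_model` in that package. It rejects `.en` for the Large and Turbo models because those have no English-only variants.

```python
# Hypothetical helper (not an Audio Note API): maps a model size and an
# English-only flag to a name in the style used by the openai-whisper package.

# Sizes that ship with an English-only ".en" variant.
SIZES_WITH_ENGLISH_ONLY = {"tiny", "base", "small", "medium"}

# Sizes that exist only as multilingual models.
MULTILINGUAL_ONLY = {"large-v2", "large-v3", "large-v3-turbo"}


def whisper_model_name(size: str, english_only: bool = False) -> str:
    """Return e.g. 'base' or 'base.en' for the given size."""
    size = size.lower()
    if size in SIZES_WITH_ENGLISH_ONLY:
        return f"{size}.en" if english_only else size
    if size in MULTILINGUAL_ONLY:
        if english_only:
            raise ValueError(f"{size} has no English-only variant")
        return size
    raise ValueError(f"unknown model size: {size}")


print(whisper_model_name("small", english_only=True))  # small.en
print(whisper_model_name("large-v3"))  # large-v3
```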

Official Whisper Models

These models are divided into different versions based on parameter size and performance to accommodate different devices and usage scenarios.

Tiny Model

The Tiny model is the smallest model in the Whisper series, with 39M parameters. It is designed for low-performance devices such as mobile devices and embedded systems. Despite its small size, it can still handle basic speech recognition tasks.

Advantages:

- Small size, low resource consumption, suitable for low-performance devices.

- Fast inference speed, suitable for scenarios with high real-time requirements.

- Low energy consumption, suitable for battery-powered devices.

The disadvantage is that due to the small number of parameters, its performance in complex speech recognition and multilingual support is limited.

Base Model

The Base model has 74M parameters. It is more accurate than the Tiny model and suits scenarios that require moderate accuracy.

Advantages:

- Provides higher recognition accuracy while maintaining a small size.

- Suitable for devices with limited resources that still need a reasonable level of accuracy.

- Supports multilingual recognition and translation functions.

Small Model

The Small model is a medium-sized model with 244M parameters, suitable for moderately powerful devices and most everyday tasks.

Advantages:

- High recognition accuracy, suitable for most daily speech tasks.

- Supports multilingual recognition and translation, offering comprehensive functionality.

- Provides a good balance when running on moderately powerful devices.

Medium Model

The Medium model has 769M parameters. It is more capable than the Small model and suits scenarios that require high accuracy.

Advantages:

- High recognition accuracy, capable of handling complex speech environments.

- Supports multilingual recognition and translation, suitable for multilingual scenarios.

- Runs smoothly on high-performance devices.

Large-v2 Model

Large-v2 is an improved version of the original Large model. It keeps the same architecture and 1550M parameters but was trained for more epochs with additional regularization, which improves accuracy.

Advantages:

- Higher recognition accuracy than the Large model.

- Same size and inference cost as the original Large model, so it is a straightforward upgrade.

- Suitable for scenarios requiring extremely high accuracy.

Large-v3 Model

Large-v3 is the newest full-size model, also with 1550M parameters. It further improves accuracy and multilingual support.

Advantages:

- Highest recognition accuracy, capable of handling extremely complex speech environments.

- Adds Cantonese as a supported language and improves performance across many other languages.

- Uses 128 Mel frequency bins for its audio input instead of 80, giving the model finer frequency detail.

Large-v3-Turbo Model

The Turbo model is a pruned and fine-tuned version of Large-v3 with 809M parameters. It reduces the number of decoder layers from 32 to 4 and focuses on speed and efficiency.

Advantages:

- Runs roughly 8 times faster than Large-v3.

- Uses roughly 40% less GPU memory than Large-v3.

- Accuracy is only slightly reduced, suitable for scenarios requiring high speed and resource efficiency.
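The parameter counts above give a rough lower bound on memory: the weights alone take about (number of parameters) × (bytes per parameter). The sketch below applies that arithmetic to Tiny through Large-v3, assuming 16-bit (2-byte) weights; that precision is an assumption about how a model is loaded, and real memory use is higher because of activations, decoding caches, and runtime overhead. Treat the output only as a way to compare model sizes.

```python
# Rough weight-only memory estimate from the parameter counts in this section.
# Assumes 2 bytes per parameter (fp16); actual memory use will be higher.

PARAMS_MILLIONS = {
    "Tiny": 39,
    "Base": 74,
    "Small": 244,
    "Medium": 769,
    "Large-v2": 1550,
    "Large-v3": 1550,
}


def weight_memory_mb(params_millions: float, bytes_per_param: int = 2) -> float:
    """Megabytes (10**6 bytes) needed just to hold the model weights."""
    # (params_millions * 10**6 params) * bytes / 10**6 bytes per MB
    return params_millions * bytes_per_param


for name, params in PARAMS_MILLIONS.items():
    print(f"{name:<9} {params:>5}M params  ~{weight_memory_mb(params):>6.0f} MB (fp16)")
```

For example, Tiny needs about 39 × 2 = 78 MB for its weights, while Large-v3 needs about 1550 × 2 = 3100 MB, which is why the larger models are recommended only for more powerful devices.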

For guidance on model selection, refer to this recommendation.
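As a rough illustration of the trade-offs described in this section, and not a reproduction of the recommendation referenced above, the hypothetical function below picks a model from a coarse device tier, whether only English is needed, and whether speed matters more than maximum accuracy. The tier names and the mapping are assumptions made for this sketch.

```python
from enum import Enum


class DeviceTier(Enum):
    """Coarse device classes; these buckets are assumptions for this sketch."""
    LOW = "low"            # e.g. phones, embedded systems
    MODERATE = "moderate"  # e.g. typical laptops and desktops
    HIGH = "high"          # e.g. machines with a capable GPU


def suggest_model(tier: DeviceTier, english_only: bool = False,
                  prefer_speed: bool = False) -> str:
    """Hypothetical helper: suggest a Whisper model name for a device tier."""
    if tier is DeviceTier.LOW:
        size = "tiny" if prefer_speed else "base"
    elif tier is DeviceTier.MODERATE:
        size = "small"
    else:
        # Large models have no English-only variant, so english_only is ignored.
        # Turbo trades a little accuracy for much faster inference.
        return "large-v3-turbo" if prefer_speed else "large-v3"
    # Tiny, Base, Small, and Medium have English-only ".en" variants.
    return f"{size}.en" if english_only else size


print(suggest_model(DeviceTier.LOW, english_only=True))  # base.en
print(suggest_model(DeviceTier.HIGH, prefer_speed=True))  # large-v3-turbo
```

Medium is left out for simplicity; it sits between Small and the Large models and is a reasonable choice on a high-performance device when only English is needed (`medium.en`).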

Community Models

Community models come from the open-source community, and their quality and stability cannot be guaranteed. Please ensure you understand their specific implementation and applicable scenarios before use.

Community models are developed by community members and are often fine-tuned for specific languages, which can give better accuracy for those languages than the official models.

Audio Note integrates some community models, and you can select them within the software. If the built-in community models do not meet your needs, you can look for suitable models on Hugging Face and send us your suggestions. Audio Note will evaluate whether these models can be integrated.

Real-time Models

Real-time models currently support only a few languages: Chinese, English, and French.

Real-time models recognize speech as it is being spoken, rather than after a recording is finished. They are used in applications such as voice assistants and live transcription. Audio Note supports real-time models, which you can select within the software.

Characteristics of real-time models:

- CPU-only, with low hardware requirements

- Supports bilingual recognition

- Fast recognition speed and high accuracy

Because they are designed for real-time use, these models may perform slightly worse in complex speech environments, and their sentence segmentation may be less accurate.
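The segmentation caveat follows from how streaming recognition generally works: audio is consumed in short, fixed-size chunks as it arrives, so each decision is made with little or no knowledge of what comes next. The generic sketch below only illustrates that chunking pattern; the 16 kHz sample rate and 0.5-second chunk length are assumptions, and it does not describe how Audio Note's real-time models are implemented.

```python
from typing import Iterable, Iterator, List

SAMPLE_RATE_HZ = 16_000  # assumed input sample rate
CHUNK_SECONDS = 0.5      # assumed chunk length


def stream_chunks(samples: Iterable[float], chunk_size: int) -> Iterator[List[float]]:
    """Yield fixed-size chunks as samples arrive; the last chunk may be shorter.

    A streaming recognizer sees each chunk without the audio that follows it,
    which is why sentence boundaries are harder to place than when a whole
    recording is transcribed at once.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    buffer: List[float] = []
    for sample in samples:
        buffer.append(sample)
        if len(buffer) == chunk_size:
            yield buffer
            buffer = []
    if buffer:
        yield buffer


chunk_size = int(SAMPLE_RATE_HZ * CHUNK_SECONDS)  # 8000 samples per chunk
one_second_of_silence = [0.0] * SAMPLE_RATE_HZ
print([len(c) for c in stream_chunks(one_second_of_silence, chunk_size)])  # [8000, 8000]
```

Shorter chunks lower latency but give the recognizer even less context per decision, which is the trade-off behind the caveat above.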
