
Last updated: 2026-02-08

GPU Transcription

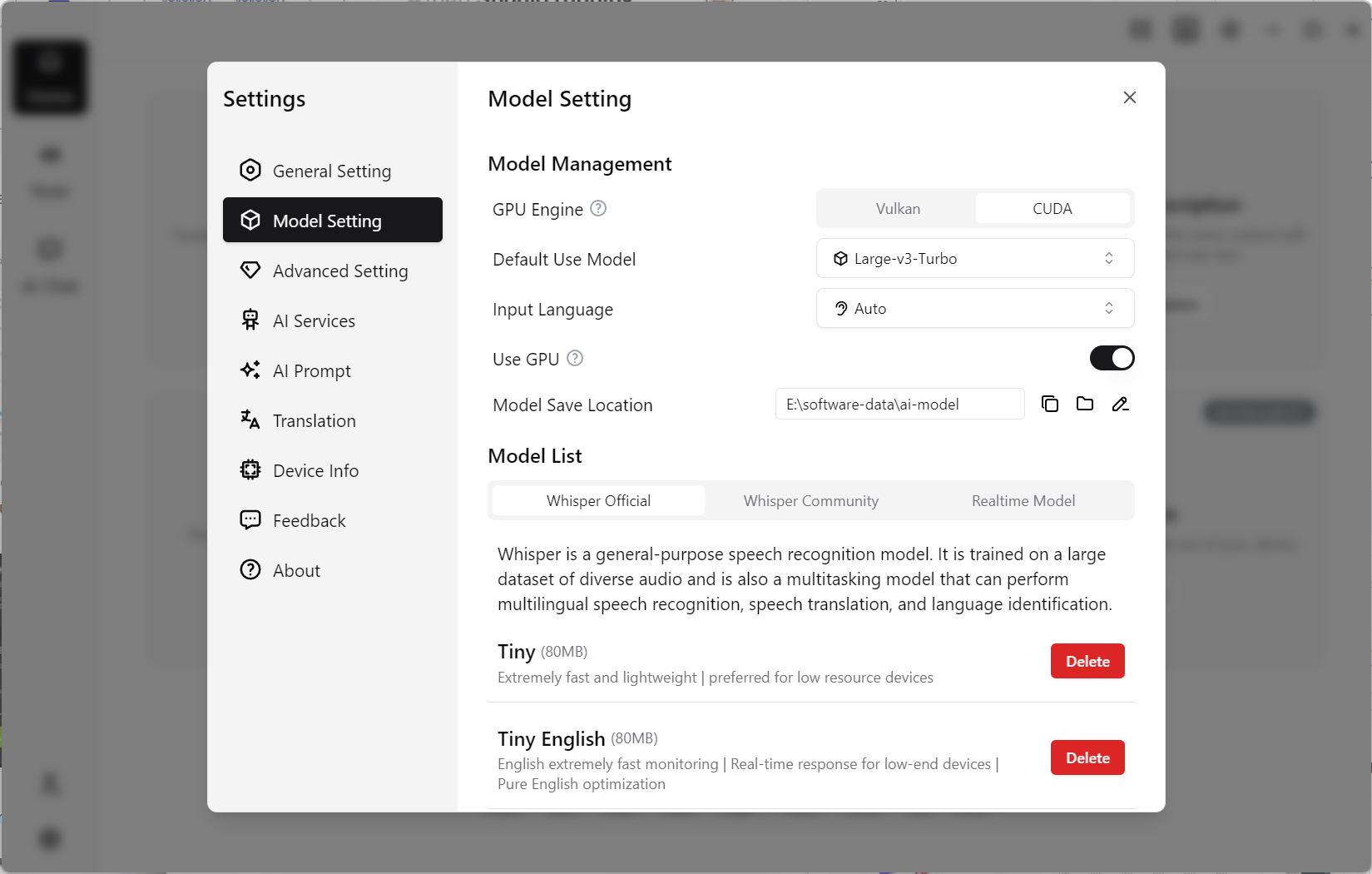

TODO (Screenshot Replacement): GPU engine selection page (App 2.0). Include: CUDA/Vulkan/CoreML options, detected hardware info, and fallback guidance. Suggested filename: gpu-engine-settings-v2-en.png

Scope

GPU settings accelerate Whisper-style model inference. Current desktop engines:

- CUDA (Windows, NVIDIA)

- Vulkan (Windows, multi-vendor)

- CoreML (macOS, Apple devices)
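The platform-to-engine mapping above can be sketched as a small lookup. This is an illustration only: the engine identifiers (`cuda`, `vulkan`, `coreml`, `cpu`), the `ENGINES_BY_OS` table, and the `has_nvidia_gpu` flag are hypothetical names, not the app's actual settings or API.

```python
import platform

# Hypothetical mapping derived from the engine list above.
# Keys are the values returned by platform.system().
ENGINES_BY_OS = {
    "Windows": ["cuda", "vulkan"],
    "Darwin": ["coreml"],  # macOS
}


def candidate_engines(os_name, has_nvidia_gpu=False):
    """Return engines worth trying, in preference order, always ending with CPU."""
    engines = list(ENGINES_BY_OS.get(os_name, []))
    # CUDA only makes sense on NVIDIA hardware, so drop it otherwise.
    if not has_nvidia_gpu and "cuda" in engines:
        engines.remove("cuda")
    return engines + ["cpu"]


print(candidate_engines(platform.system()))
```

Ending every list with `cpu` keeps a fallback available on any platform, including ones with no listed GPU engine.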

Use Cases

- Long-form transcription jobs

- Medium/Large model workloads

- Throughput-focused batch processing

Steps

- Open Settings > Transcription.

- Select the GPU engine for your platform/hardware.

- Run a CPU vs GPU comparison on the same media sample.

- Keep GPU as default only after confirming stability.

- If failures occur, fall back to CPU and check drivers/runtime dependencies.
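The fallback step above could look like the following in a scripted workflow. This is a minimal sketch, assuming a transcription function that takes a file path and an engine name; `transcribe_with_fallback` and `fake_run` are hypothetical helpers, and `fake_run` only simulates a GPU failure.

```python
from typing import Callable, Sequence, Tuple


def transcribe_with_fallback(
    path: str,
    engines: Sequence[str],
    run: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Try each engine in order and return (engine_used, transcript)."""
    errors = []
    for engine in engines:
        try:
            return engine, run(path, engine)
        except Exception as exc:  # backend errors vary, so this sketch catches broadly
            errors.append(f"{engine}: {exc}")
    raise RuntimeError("all engines failed: " + "; ".join(errors))


def fake_run(path: str, engine: str) -> str:
    """Stand-in for a real transcription call; the CUDA engine fails on purpose."""
    if engine == "cuda":
        raise RuntimeError("missing runtime library")
    return f"transcript of {path}"


print(transcribe_with_fallback("meeting.wav", ["cuda", "cpu"], fake_run))
```

Collecting the per-engine error messages rather than discarding them keeps the driver or runtime problem visible for later diagnosis.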

Benchmark Tips

- Compare CPU vs GPU on the same file, model, and language settings.

- Measure both elapsed time and failure/retry rate.

- Validate short and long audio separately (benefits differ by duration).
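A small harness can record both metrics from the tips above. The sketch below assumes each transcription run can be wrapped in a zero-argument callable; `BenchResult` and `benchmark` are hypothetical names, and the sample job is a placeholder rather than a real transcription.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class BenchResult:
    engine: str
    runs: int
    failures: int
    mean_seconds: float  # mean over successful runs only; NaN if none succeeded

    @property
    def failure_rate(self) -> float:
        return self.failures / self.runs if self.runs else 0.0


def benchmark(engine: str, job: Callable[[], object], runs: int = 3) -> BenchResult:
    """Run `job` several times, recording elapsed time and failure count."""
    durations = []
    failures = 0
    for _ in range(runs):
        start = time.perf_counter()
        try:
            job()
        except Exception:
            failures += 1
            continue
        durations.append(time.perf_counter() - start)
    mean = sum(durations) / len(durations) if durations else float("nan")
    return BenchResult(engine, runs, failures, mean)


# Placeholder job; replace with a call that transcribes the same file,
# model, and language settings on the engine under test.
result = benchmark("cpu", lambda: sum(range(100_000)))
print(result, result.failure_rate)
```

Running it once with a short clip and once with a long recording gives the separate short/long comparison the last tip asks for.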

Term Explanations

- Runtime libraries: backend dependencies required by specific GPU engines.

- VRAM: GPU memory; low VRAM often causes out-of-memory (OOM) errors or forced fallback.

- Fallback path: safe recovery route (usually CPU) when acceleration fails.

Real Scenario: Weekly Batch Meeting Processing

- Run one baseline task on CPU and record time/failure behavior.

- Run the same task on GPU with identical model/language settings.

- Roll out GPU defaults only if speed gains remain consistent and retry cost stays low.

This avoids false optimization where peak speed improves but real workflow reliability degrades.
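The rollout rule in this scenario can be written as an explicit check. This is a sketch under stated assumptions: the thresholds `min_speedup=1.5` and `max_retry_rate=0.05` are illustrative placeholders, not recommended values, and `should_default_to_gpu` is a hypothetical helper.

```python
def should_default_to_gpu(cpu_seconds, gpu_seconds, gpu_retries,
                          min_speedup=1.5, max_retry_rate=0.05):
    """Decide whether GPU should become the default engine.

    cpu_seconds / gpu_seconds: elapsed times for the same jobs, in the same order.
    gpu_retries: total number of retries needed across the GPU runs.
    """
    if not cpu_seconds or len(cpu_seconds) != len(gpu_seconds):
        raise ValueError("need paired, non-empty timing lists")
    speedups = [cpu / gpu for cpu, gpu in zip(cpu_seconds, gpu_seconds)]
    # "Consistent" here means every job met the target, not just the average.
    consistent = min(speedups) >= min_speedup
    retry_rate = gpu_retries / len(gpu_seconds)
    return consistent and retry_rate <= max_retry_rate


# Three weekly meetings timed on both engines (made-up numbers).
print(should_default_to_gpu([600, 620, 610], [200, 210, 205], gpu_retries=0))
```

Using the minimum speedup instead of the mean is one way to guard against the false optimization described above, where a single fast run hides unreliable ones.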

Common Mistakes

- Mistake 1: Enabling GPU and immediately scaling to full concurrency.

Fix: start with small batches, then increase load gradually.

- Mistake 2: Judging compatibility by GPU model alone.

Fix: include driver/runtime/OS patch status in checks.

- Mistake 3: Abandoning GPU after one failure.

Fix: keep CPU fallback for continuity, then diagnose backend issues incrementally.
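The fix for Mistake 1 (start small, then increase load) can be sketched as a ramp-up loop. It assumes a caller-supplied `run_batch(n)` that runs `n` concurrent jobs and returns how many failed; that callable, `ramp_up`, and the 10% failure threshold are all hypothetical.

```python
def ramp_up(run_batch, start=1, limit=8, max_failure_rate=0.1):
    """Double concurrency until `limit` or until failures exceed the threshold.

    Returns the highest concurrency level that stayed within the threshold,
    or 0 if even the starting level failed too often.
    """
    level = start
    last_good = 0
    while level <= limit:
        failures = run_batch(level)
        if failures / level > max_failure_rate:
            break
        last_good = level
        level *= 2
    return last_good


# Simulated backend that copes with up to 4 concurrent jobs, then fails all of them.
print(ramp_up(lambda n: 0 if n <= 4 else n))
```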

FAQ

Q: CUDA or Vulkan on Windows?

A: Prefer CUDA on NVIDIA GPUs. Use Vulkan for non-NVIDIA hardware or compatibility fallback.

Q: Why no CUDA on macOS?

A: The macOS acceleration path is CoreML/Metal-oriented, so CUDA is not offered there.

Q: Is GPU always faster?

A: Usually for larger models and longer audio. Gains can be modest on short/light tasks.

Limitations

- Status: Stable (non-Beta); engine/driver compatibility can still change with OS updates.

- Engine availability depends on OS, GPU model, drivers, and app entitlements.

- CUDA may require additional runtime libraries on first use.

- Low-VRAM devices may fail or throttle under large models.


Copyright © 2026. Made by AudioNote, All rights reserved.